What do deep fakes mean for politics?

The implications of AI-generated digital forgery

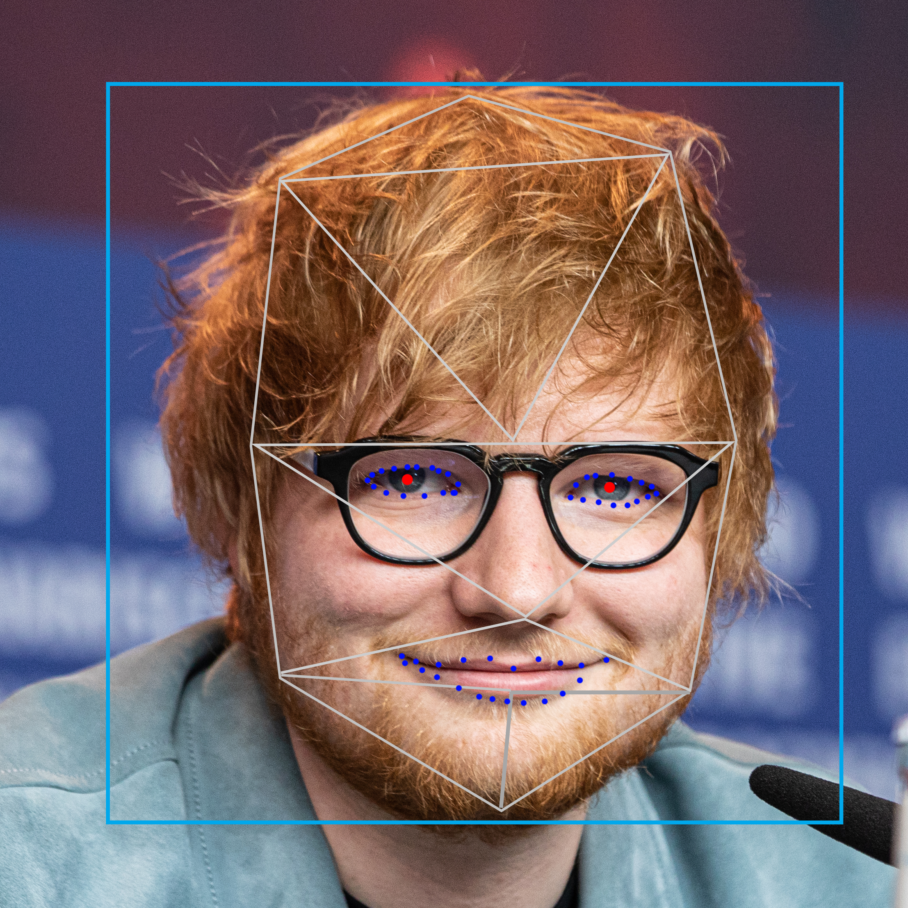

Deep fakes, a form of digital content forgery, are visual forgeries created with neural networks, a subset of machine learning that can algorithmically transpose one face onto another. This Mission Impossible-esque process results in extremely convincing videos, making it difficult to separate what is real from what is fake.

Deep fake tech is one of the most worrying consequences of rapid artificial intelligence (AI) advancement. In an interview with the Brookings Institution research group, Nick Dufour, a research engineer at Google, said that deep fakes “have allowed people to claim that video evidence that would otherwise be very compelling is a fake.”

Even worse, our ability to generate deep fakes is advancing much faster than our ability to detect them. The artificial intelligence sector is much less concerned with identifying fake media than it is with creating it.

Deep fakes and democracy

A 2019 deep fake of Nancy Pelosi, Speaker of the United States House of Representatives, in which she appears to be slowly stumbling through her words, went viral on YouTube and Facebook. According to the Daily Beast, the clip was generated by 34-year-old Trump supporter Shawn Brooks. The video was eventually reposted on Twitter by President Donald Trump himself with the caption: “Pelosi Stammers Through News Conference.” Although this was not the first time Facebook had been accused of spreading misinformation, the company declined to remove the clip, stating that it had “dramatically reduced its distribution” once the video was identified as false.

Disinformation like this has grave consequences. Since the code used to create deep fakes is open source, false videos can be created by anyone, from lone actors to political groups. A report by the Brookings Institution explains the numerous political implications deep fakes present: “distorting democratic discourse; manipulating elections; eroding trust in institutions; weakening journalism; exacerbating social divisions; undermining public safety; and inflicting hard-to-repair damage on the reputation of prominent individuals, including elected officials and candidates for office.”

Disinformation goes beyond U.S. politics. Spreading fake news and sensationalist content to influence politics is a global phenomenon. The internet is arguably the world’s largest and most influential non-governmental actor, and the speed at which disinformation can be created and spread through it poses a significant threat to democracy.

The central African country of Gabon has already seen this happen. In 2019, an alleged deep fake may have sparked an attempted coup. Beyond their effect on who holds office, deep fakes can also have a seismic effect on political narratives.

Evidence and information are crucial for the democratic process, and deep fakes can ruin that process. People may believe what they see in deep fakes without question. This idea that “seeing is believing” is what led computer scientist Aviv Ovadya to coin the term “reality apathy.” Reality apathy describes a potential future where no one believes any information they receive because they cannot tell the difference between truth and fiction.

The future of deep fakes

A number of cybersecurity startups are trying to tackle the issue of deep fakes. Faculty, a United Kingdom-based AI company, has generated numerous deep fakes using every open-source algorithm available. The company compiles datasets that will train systems to separate real videos from fake ones.

The reality is that machine learning detection systems will adapt more quickly and effectively than government legislation. Debates over the right to free speech are difficult to settle, and the technology is developing too quickly for politicians to handle. Faculty’s CEO said in a Financial Times interview, “It’s an extremely challenging problem and it’s likely there will always be an arms race between detection and generation.”

In the long run, deep fakes may just become another player in the cat-and-mouse game between cybercriminals and cybersecurity officers. Another measure would be for major tech companies like Facebook and Twitter to take more rigorous steps against the spread of disinformation. However, is depending solely on private companies to solve a sociopolitical crisis effective enough? In these post-truth times, deep fakes may decimate our ability to make truth-based decisions. The question going forward should not be how to stop people from making deep fakes, but how to foster a digital and political environment that actively targets disinformation.